Remove a folder - twice

How hard can it be to remove a folder in Windows? Part 4: random errors on empty folder

Random errors on empty folder

So it’s all good: we wrote our function to enumerate and recursively delete sub-folders and files, handled errors such as permission denied and read-only files, and still, from time to time, the deletion fails with `ERROR_DIR_NOT_EMPTY`. Investigation shows that after the error occurred the directory is actually empty and can be deleted manually. What’s happening?

Stack Overflow

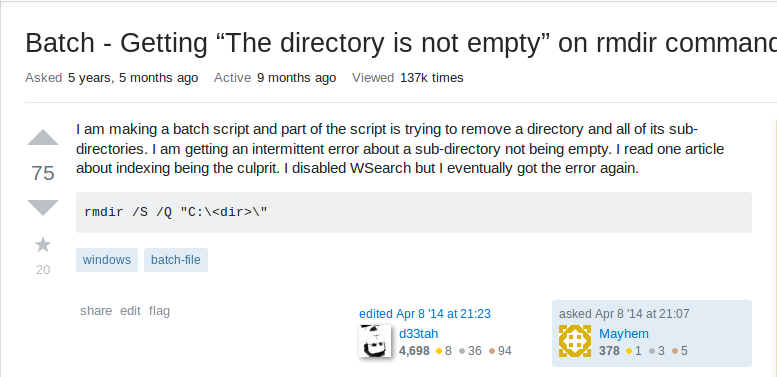

One of the most relevant search results is a Stack Overflow question titled "Batch - Getting “The directory is not empty” on rmdir command":

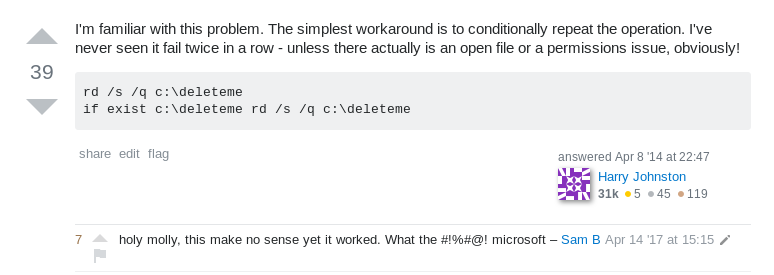

And the answer is … do it twice:

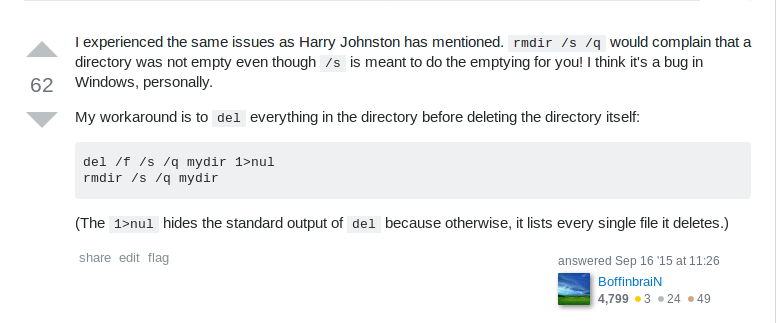

And the even better answer (with more votes) is … do it twice, but ignore errors and don’t log the first time.

That can’t be true, can it?

What’s happening

Windows has background services like the search indexer. The purpose of this service is to allow a user to quickly find documents: a user can do a search in Windows Explorer and quickly get a list of documents containing the search words. This is achieved by the search indexer monitoring the filesystem for changes. When a change is detected, it waits a bit to allow documents to be saved, then it tries to open the files where a change was detected, extract their text content and update an index that is used for fast retrieval of search results. To minimize interference with applications, the search indexer opens files with flags that maximize sharing, including `FILE_SHARE_DELETE`.

When we delete a file that is also opened by another process with `FILE_SHARE_DELETE`, the function `DeleteFileW` reports success, but in reality the file is not yet deleted. It will only be deleted when all the handles to it are closed. If that has not happened by the time we try to delete the parent folder, we get the error `ERROR_DIR_NOT_EMPTY` from `RemoveDirectoryW`.

What we see here is a consistency problem typical of distributed systems, except that we’re inside a single physical system with multiple processes and threads. One way to look at it is that the question ‘does a file exist?’ has multiple answers:

- There is the actual, deep, origin answer, checked by functions such as `RemoveDirectoryW` and `CreateFileW` (which fails with `ERROR_ACCESS_DENIED` when creating a file on top of another that is not deleted yet).
- There is the shortcut, shallow answer, checked by functions like `GetFileAttributesExW` (used by `std::filesystem::exists`) and the `Find...` functions (used by `std::filesystem::directory_iterator`), which will not see a file that is marked for deletion but not yet really deleted.

Solution

The solution looks silly: recursively delete, and on errors wait a bit and retry once more. The reason it works is that this problem, like many other consistency problems, is solved by eventual consistency.

You’re more likely to see this problem when deleting folders with many files and sub-folders. When deleting a small folder with a few files, the deletion has completed by the time the search indexer wakes up.

It is important to continue on errors and try to delete as many files as possible. Otherwise, if we stop on the first error, many files are left behind, and at the second attempt the search indexer again has time to wake up and catch up. And it has a good chance to catch up because it’s a bit like driving behind a snow plough: while we slowly delete files (the snow plough), the search indexer moves from one file to the next remaining file (driving behind the snow plough).

The same issue can happen with other applications; it’s not limited to the search indexer.
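A minimal sketch of this approach, using C++17 `std::filesystem`. The helper names `remove_tree_best_effort` and `remove_tree_with_retry` and the 200 ms default delay are assumptions made for illustration, not code from the earlier parts of this series, and the read-only and permission handling discussed there is left out to keep the example short.

```cpp
#include <chrono>
#include <cstddef>
#include <filesystem>
#include <system_error>
#include <thread>
#include <vector>

namespace fs = std::filesystem;

// Hypothetical helper: one best-effort pass. Deletes as much as possible
// under `p` (and `p` itself) and never stops at the first error.
// Returns the number of failures encountered.
std::size_t remove_tree_best_effort(const fs::path& p) {
    std::error_code ec;
    const fs::file_status st = fs::symlink_status(p, ec);
    if (st.type() == fs::file_type::not_found)
        return 0;  // already gone, nothing to do

    std::size_t failures = 0;
    if (fs::is_directory(st)) {
        // Snapshot the entries first so we do not delete while iterating.
        std::vector<fs::path> entries;
        for (fs::directory_iterator it(p, ec), end; !ec && it != end; it.increment(ec))
            entries.push_back(it->path());
        if (ec) ++failures;  // enumeration failed or was cut short

        for (const fs::path& entry : entries)
            failures += remove_tree_best_effort(entry);  // keep going on errors
    }

    // Removes a file, a symlink, or a (hopefully empty) directory.
    fs::remove(p, ec);
    if (ec) ++failures;
    return failures;
}

// Hypothetical wrapper: try once, and on any failure wait a bit and retry
// once more. The delay value is an arbitrary assumption.
bool remove_tree_with_retry(
    const fs::path& dir,
    std::chrono::milliseconds delay = std::chrono::milliseconds(200)) {
    if (remove_tree_best_effort(dir) == 0) return true;
    std::this_thread::sleep_for(delay);  // let other processes close their handles
    return remove_tree_best_effort(dir) == 0;
}
```

The first pass deliberately does not log or report individual errors; only the outcome of the second pass is returned to the caller, which mirrors the "ignore errors the first time" advice above.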

OS alternatives

The errors on an empty folder are caused by how Windows behaves: it reference-counts open handles to a file and defers the deletion, including removing the file from the folder contents, until the reference count reaches zero.

Alternatively, the file could be removed from the folder contents as soon as the delete API is called, while still deferring the other deletion activities until the count reaches zero. This could still create surprises, such as removing files not immediately increasing the available disk space, which might be a problem when the disk is almost full.

My understanding is that this is the approach that Linux takes. Windows might eventually take this approach as well, at least to enhance support for Linux applications/emulation.
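The Linux behaviour can be observed with a small POSIX-only sketch (it will not compile on Windows). The function name `rmdir_succeeds_with_open_unlinked_file` and the file name `data.txt` are made up for this illustration. It keeps a file open, unlinks it, and then checks that the parent directory can be removed right away while the open descriptor still works.

```cpp
#include <fcntl.h>
#include <string>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

// POSIX-only sketch. Creates `dir` with one file in it, keeps the file open,
// deletes the file and then the directory. Returns true if both deletions
// succeeded while the file descriptor was still open and usable.
bool rmdir_succeeds_with_open_unlinked_file(const std::string& dir) {
    if (mkdir(dir.c_str(), 0700) != 0) return false;

    const std::string file = dir + "/data.txt";
    const int fd = open(file.c_str(), O_CREAT | O_RDWR, 0600);
    if (fd < 0) {
        rmdir(dir.c_str());
        return false;
    }

    // The name is removed from the directory immediately...
    const bool unlinked = unlink(file.c_str()) == 0;
    // ...so the directory is already empty and can be removed.
    const bool removed = rmdir(dir.c_str()) == 0;

    // The file contents stay reachable through the open descriptor.
    const char msg[] = "still here";
    const bool writable =
        write(fd, msg, sizeof msg) == static_cast<ssize_t>(sizeof msg);

    close(fd);  // only now can the storage be reclaimed
    return unlinked && removed && writable;
}
```

The disk space used by the file is released only at the final `close`, which is the "available space does not increase" surprise described above.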

Conclusion

If there is something to learn from this series of articles, it is that removing files is more complicated than expected.